Latency in Spatial Audio

A round-trip evaluation of latency in Apple’s AirPods Pro when they’re used with the Spatial Audio feature of iOS 14

There has been a lot of interest recently in Apple’s AirPods Pro and the Spatial Audio¹ feature in iOS 14. As developers of Spatial Audio algorithms, we were intrigued to establish how much latency there is in Apple’s implementation and to have an empirical account of its detectability. Read on to find out why detectable latency is important, our motivation for measuring it, the test we constructed, the results of our test, and the implications for latency in spatial experiences.

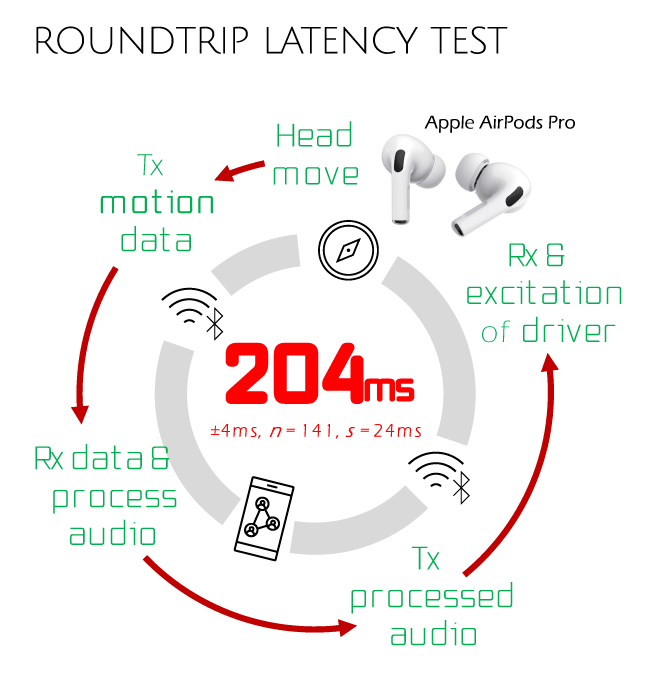

TL;DR: It’s approximately 204ms. For undetectable latency, it should be less than 30ms, so Apple’s solution is roughly 7x slower than desired if you want motion-to-audio ‘realism’ for a naturalistic listening experience.

Spatial Audio is at its most convincing when combined with head-tracking. Most readers will be familiar with head-tracking in VR/MR headsets. Data captured from accelerometers, gyroscopes, and occasionally magnetometers provides state information about the orientation of the head, with the axes representing the scope of movement in 3 Degrees of Freedom (3DoF): turning left/right (yaw); nodding back/front (pitch); and tilting side to side (roll). This data is used to define the positional relationship between the user’s eyes or ears and any virtual content that is spatialised around them, visual or audio.
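To make the relationship between head orientation and virtual content concrete, here is a minimal sketch that rotates a world-fixed source direction into head coordinates. The axis convention (x forward, y to the left, z up), the yaw-then-pitch-then-roll order, and the function names are our assumptions for illustration, not a description of how any particular headset or framework does it.

```python
import math

def rotation_matrix(yaw, pitch, roll):
    """Head-to-world rotation, R = Rz(yaw) * Ry(pitch) * Rx(roll), angles in radians.

    Assumed convention: x forward, y left, z up.
    """
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def world_to_head(direction, yaw, pitch, roll):
    """Express a world-fixed direction vector in head coordinates."""
    r = rotation_matrix(yaw, pitch, roll)
    # The inverse of a rotation is its transpose, so multiply by R^T.
    return [sum(r[j][i] * direction[j] for j in range(3)) for i in range(3)]

def azimuth_deg(v):
    """Horizontal angle of a vector; positive means to the listener's left."""
    return math.degrees(math.atan2(v[1], v[0]))

# A source straight ahead in the room, with the head turned 60 degrees to the left.
source = [1.0, 0.0, 0.0]
relative = world_to_head(source, math.radians(60), 0.0, 0.0)
print(round(azimuth_deg(relative), 1))  # -60.0, i.e. 60 degrees to the right
```

The renderer needs this head-relative direction, rather than the room-relative one, every time new orientation data arrives.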

More recently, we are beginning to see headphones/earphones and other audio-based wearables come with an inbuilt Inertial Measurement Unit (IMU) — an electronic device that contains an accelerometer, gyroscope, and sometimes a magnetometer, in a miniaturized chip format that provides data about force, angular rate and so on to establish direction/orientation in 3-space. Although the IMU in a headphone may be there for other purposes, such as ‘activity tracking’ (is the wearer moving or still and so on), it immediately lends itself to spatial audio presentations. We’re expecting motion-tracked spatial audio for headphone technology to become the norm over the next few years.

Bose’s QC35 Headphones and their Frames sunglasses-cum-headphone wearable are good examples of products with an IMU that is used to send orientation data to a playback device to get back spatial audio rendered as a binaural stream (we call this Circular Processing). Unfortunately, Bose’s $50m flagship AR program was shelved in June because it “didn’t become what we envisioned.” This was widely thought to be because of latency issues associated with the round-trip (see here for an interesting admission), but the devices still contain the IMU and Bose is expected to bring new services forward once it has solved its experiential problems.

Other products like the forthcoming Zoku Hy also have an integrated IMU, but they do the spatial audio processing on-board, removing any need for round-trips to a device. In this mode, the headset is processing audio that has already arrived over wireless (we call this Linear Processing), and consequently, listeners experience very low latency that is below the level of detectability.

Back in June 2020, Apple announced they were enabling Spatial Audio for the AirPods Pro. The announcement revealed that AirPods Pro, which had been available to purchase since December 2019, had an integrated IMU and that in the forthcoming iOS 14 update a ‘spatial audio’ feature would be enabled on certain versions of iPhone/iPad/Apple TV.

The emphasis of the Apple announcement was that users would get ‘theatre-like’ spatial audio akin to surround sound in cinema theatres. Neat marketing of course — who doesn’t want their personal cinema experiences to be like the best surround systems we find in cinema theatres? But it’s not the sound system they’re offering but the kinetic experience associated with moving your head while the speakers are in a fixed position. When you move your head in the theatre, the speakers are fixed in position on the walls but the sound in relation to your head has moved — if you look away 180° from the screen, the dialogue will be behind you.

At Kinicho, we have been developing the next generation of algorithms for processing kinetic volumetric spatial audio using both software and hardware solutions. Earlier in 2020, we were successful in porting our Sympan® Sonic Reality Engine® onto a low-power DSP chip that is used by many true wireless stereo (TWS) vendors. Integrated DSP solutions are critical to enabling the Linear Processing approach needed to guarantee ultra-low latency spatial audio experiences for TWS solutions. Ultra-low latency is the key engineering focus of our revolutionary approach, so we’re naturally inquisitive about latency times in this space.

Researchers at the US Wright-Patterson Air Force Base have been studying head-tracked audio latency for some time, since virtual audio is important for mission-critical in-flight information systems and so features heavily in pilot training simulations. In a seminal and well-cited paper², Brungart et al. designed and conducted an experiment to test for the detectability of head-tracking latency in virtual audio systems. Their results show that head-tracker latencies smaller than 30ms are required to ensure that motion delays are undetectable.

For anyone wishing to create a natural, realistic head-tracked experience, it's important to be under or close to this 30ms target. As our integrated Sonic Reality Engine® solution performs well with <10ms latency times we were intrigued to know what latency was created in the Apple Spatial Audio ecosystem.
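One way to picture what a latency figure means in practice is to ask how far the head has turned before the audio catches up: the angular lag is simply head speed multiplied by latency. The sketch below works that through. The head speed of 100°/s is a hypothetical value we picked for illustration, not a measurement.

```python
def angular_lag_deg(head_speed_deg_per_s, latency_ms):
    """Angle the head turns through before the audio reflects the movement."""
    return head_speed_deg_per_s * latency_ms / 1000.0

HEAD_SPEED = 100.0  # degrees per second, hypothetical example value

for latency_ms in (10, 30, 204):
    lag = angular_lag_deg(HEAD_SPEED, latency_ms)
    print(f"{latency_ms:>3} ms latency -> sound image lags by about {lag:.1f} degrees")
```

At that assumed speed, a 30ms system lags by about 3°, while a system with a latency of around 200ms lags by about 20°.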

The R&D team at Kinicho constructed a test to evaluate the round-trip latency of Apple’s AirPods Pro. The principal metric of the test that we aimed to capture is the time it takes from the motion of the head — or a proxy of head movement — to a detectable change in sound caused by the motion.

Before we outline our test methodology, it’s useful to understand what is happening in the Apple Spatial Audio ecosystem for a movement of the head to result in a change in the spatial balance of the virtual audio. We have summarised this into 5 distinct phases, although each phase has some sub-processes (and we could have cut it up in other ways):

- The process starts with a movement of the head of someone wearing the AirPods Pro.

- The motion generates a state-change on the IMU. The IMU data is sampled³ and transmitted as a stream to the host device over Bluetooth wireless data transmission.

- On the host device, the received motion stream data is made available to Apple’s Audio Framework and is used to rotate the Surround Sound content (5.1, 7.1 or Dolby Atmos) in relation to the yaw, pitch and roll of the head. The rotated surround audio is then decoded into a binaural stream: 2 channels of audio, one for each ear.

- The 2 channel binaural audio stream is then encoded/compressed and transmitted back to the AirPods Pro.

- When the binaural audio is received back at the AirPods Pro, it is decoded/uncompressed into two channels of raw PCM digital audio. One channel of PCM audio is sent to the ‘slave’ AirPod, the other is delayed on the ‘master’ AirPod to keep them in sync. Each side is then converted from digital to analog audio to excite the driver/speaker of the respective AirPods Pro.

This round-trip process, AirPods Pro to host device and back to AirPods Pro, is a continuous circuit that runs for as long as Spatial Audio is active, which is why we refer to it as the Circular Processing mode. It is not unique to Apple: most other headphone solutions, whether they have an integrated or an external IMU, follow this circular process.
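The rotation step on the host device can be pictured with a small yaw-only sketch: each surround channel keeps its place in the room, so its angle relative to the head shifts by the opposite of the head's yaw. The nominal 5.1 angles below follow the common ITU-style layout, and the sign convention (positive means to the listener's left) is our assumption. This is an illustration of the idea, not Apple's implementation.

```python
# Nominal 5.1 loudspeaker azimuths in degrees (positive = listener's left).
# The LFE channel is omitted because it is not directional.
LAYOUT_5_1 = {"L": 30.0, "R": -30.0, "C": 0.0, "Ls": 110.0, "Rs": -110.0}

def wrap_deg(angle):
    """Wrap an angle into the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0

def head_relative_layout(layout, head_yaw_deg):
    """Azimuth of each room-fixed channel as seen from a head turned by head_yaw_deg."""
    return {name: wrap_deg(az - head_yaw_deg) for name, az in layout.items()}

# Head turned 60 degrees to the left: the centre channel now sits 60 degrees to the right.
print(head_relative_layout(LAYOUT_5_1, 60.0))
# {'L': -30.0, 'R': -90.0, 'C': -60.0, 'Ls': 50.0, 'Rs': -170.0}
```

Every new orientation sample changes these angles, so the binaural decode has to be refreshed continuously for as long as the head is moving.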

The test system that we constructed to capture our observations has 8 components:

- 1 x Sound Pressure Level measurement microphone to pick up the audio from the AirPods Pro

- test audio asset: a 1kHz sine tone on the center channel only of an uncompressed 5.1 PCM file embedded in a .mov container, used to play audio through the test system

- audio software (Reaper DAW and WaveLab) running on Laptop with an ASIO Audio Interface (RME Digiface) and microphone pre-amp (Behringer)

- a rig that holds all the equipment needed for the test — we built this out of a modular construction kit

- a rotating element to act as a proxy for head movement (hereafter the ‘head-proxy’): a mounting system was constructed with the modular construction kit to hold the measurement microphone facing in opposite directions, and this sat on a spindle positioned at the center of the rig so it could be rotated around a pivot to simulate a head movement of 60°

- additional audio cabling used to create contact points on the rig to detect when the rotating element had moved from its starting position and arrived at its final position; these contact points were connected to the audio recording system via an XLR plug

- a set of AirPods Pro: the right AirPod was attached to a measurement microphone by inverting its smallest silicone bud and using this as a sheath to enclose the microphone’s capsule aperture

- an iPhone 11 Pro running the Air Video HD Server App (serving from the laptop) to play the test-audio .mov and handle the motion in Spatial Audio mode. The .mov will run throughout the test so there’s no latency impact from this process.

The test instrumentation was manually operated and consisted of eight stages:

1. start the test-audio .mov on the iPhone and begin a multichannel recording in Reaper (1 channel for the right AirPod Pro and 1 channel for the contact data)

2. put the head-proxy into its starting position

3. rotate the head-proxy toward its finishing position. When it leaves the starting position, an electrical circuit breaks, which sends a change in current into the contact data channel that is recorded as a positive spike. The same movement starts the motion data transfer from the AirPods Pro, initiating a change in the audio content that alters the signal in the AirPod.

4. continue moving the head-proxy to the finishing position, approximately 60°, then pause for a moment for the audio to become stable again

5. repeat steps 2 to 4 until sufficient observations have been made

6. stop the recording process and save the recordings

7. transfer the time-aligned recordings to WaveLab and use markers to identify the transients created by the circuit breaking/connecting and the drop in amplitude at 1kHz measured in the spectral FFT analysis window

8. export the markers to Excel to calculate the number of samples between each pair of markers, then convert the sample differences between events into milliseconds based on a 48kHz sample rate. The latency was calculated as the difference in milliseconds between the circuit-break transient event and the drop in amplitude.
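The conversion in the final step is simple arithmetic: a difference in samples divided by the sample rate. A minimal sketch follows, equivalent in spirit to the spreadsheet step. The marker positions are hypothetical values chosen for illustration, not our measured data.

```python
SAMPLE_RATE_HZ = 48_000  # sample rate of the recording, as stated in the procedure

def samples_to_ms(n_samples, sample_rate_hz=SAMPLE_RATE_HZ):
    """Convert a count of samples into milliseconds."""
    return n_samples * 1000.0 / sample_rate_hz

def latencies_ms(marker_pairs, sample_rate_hz=SAMPLE_RATE_HZ):
    """Each pair is (circuit_break_sample, amplitude_drop_sample) for one observation."""
    return [samples_to_ms(drop - brk, sample_rate_hz) for brk, drop in marker_pairs]

# Hypothetical marker positions for two observations.
example_markers = [(48_000, 57_792), (240_000, 249_600)]
print(latencies_ms(example_markers))  # [204.0, 200.0]
```

At 48kHz one sample lasts roughly 0.02ms, so the sample grid itself is not the limiting factor on precision; the placement of the markers is.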

141 observations were collected, which formed a normal distribution⁴. The average (mean) latency was 204ms. We calculated the confidence interval at the 0.05 significance level to be ±4ms, so we are 95% confident that the true mean lies between 200ms and 208ms. The sample also had a low standard error of 2ms, so we expect the normal operation of the AirPods Pro with Apple’s Spatial Audio ecosystem would ordinarily be close to 204ms.
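For readers who want to check how these figures fit together, the sketch below computes a mean, standard error and 95% confidence interval from a list of latencies, and then reproduces the interval from the reported mean and standard error alone. It assumes the usual normal-approximation multiplier of 1.96. The function names are ours.

```python
import math
import statistics

def summarise(latencies_ms, z=1.96):
    """Mean, standard error and confidence interval of the mean for a sample."""
    n = len(latencies_ms)
    mean = statistics.fmean(latencies_ms)
    se = statistics.stdev(latencies_ms) / math.sqrt(n)
    return {"n": n, "mean": mean, "se": se, "ci": (mean - z * se, mean + z * se)}

def ci_from_reported(mean, se, z=1.96):
    """Confidence interval rebuilt from a reported mean and standard error."""
    half_width = z * se
    return mean - half_width, mean + half_width

low, high = ci_from_reported(204.0, 2.0)
print(f"95% CI: {low:.2f} ms to {high:.2f} ms")  # 95% CI: 200.08 ms to 207.92 ms

# A standard error of 2 ms over 141 observations implies this sample standard deviation.
print(f"implied SD: {2.0 * math.sqrt(141):.1f} ms")  # implied SD: 23.7 ms
```

The half-width of 1.96 × 2ms ≈ 3.9ms is where the rounded ±4ms comes from.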

This test did not evaluate any aspect of the Apple Spatial Audio ecosystem other than the round-trip latency using the AirPods Pro, so we’re not commenting on the overall experience. We’re only observing that the round-trip latency is approximately 7x slower than the sub-30ms target latency that is considered to be undetectable following the findings of Brungart et al.

Some users may not notice the latency in normal use. This is not the same as it being undetectable; whether users perceive the delay in normal use is a different matter. However, at 7x slower, we think there is a strong likelihood that the overall experience may be diminished as users become more familiar with the ecosystem and start to notice the latency. Of course, even where the latency is noticeable, some users will accept this as a necessary trade-off for the experience.

For developers who are looking to build products on top of this eco-system — we have early prototypes in Spatial Audio production, VR, and for Web interaction so far — it’s worth noting that you might expect latency a little higher depending on how you are transporting the Yaw/Pitch/Roll data into your application and then what you are doing with it once there. It’s worth taking into account that some activities might make the latency more noticeable than others.

A final note

We also conducted another related test, which we don’t plan to blog about as we think a comment here is sufficient. We followed the method set out by Stephen Coyle⁵ to test the transmission of audio from the host device to the AirPods Pro. This is not related to Spatial Audio per se, but we anticipate this delay to be the same as the latency on the return leg of the round-trip, where audio is encoded/compressed to be transmitted from the host device to the AirPods Pro before being converted to an analog signal used to excite the driver. We repeated this test 131 times and observed the same mean latency of 144ms as Coyle. We don’t have a breakdown of how the other 60ms is spent, although we’re thinking about a viable test for that. We’ll blog about that if we come up with any insightful observations.
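Putting the two measurements side by side gives a rough latency budget. The sketch below only restates the figures already quoted in this post, under our working assumption that the 144ms audio transmission delay carries over unchanged to the return leg of the round-trip.

```python
TOTAL_ROUND_TRIP_MS = 204  # mean motion-to-sound latency from the main test
RETURN_LEG_MS = 144        # mean host-to-AirPods audio latency from the related test
DETECTABILITY_MS = 30      # threshold reported by Brungart et al.

def latency_budget(total_ms, return_leg_ms, threshold_ms):
    """Split the round-trip into the return leg and everything else."""
    return {
        "remainder_ms": total_ms - return_leg_ms,
        "return_leg_share": return_leg_ms / total_ms,
        "total_vs_threshold": total_ms / threshold_ms,
        "return_leg_vs_threshold": return_leg_ms / threshold_ms,
    }

budget = latency_budget(TOTAL_ROUND_TRIP_MS, RETURN_LEG_MS, DETECTABILITY_MS)
print(budget["remainder_ms"])                       # 60
print(round(budget["return_leg_share"], 2))         # 0.71
print(round(budget["total_vs_threshold"], 1))       # 6.8
print(round(budget["return_leg_vs_threshold"], 1))  # 4.8
```

On these numbers the return leg accounts for about 71% of the round-trip, and on its own it is already several times the 30ms threshold.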

We will also publish another two blogs on the AirPods Pro shortly:

- a blog giving users some audio assets and instructions on how to experience the latency for themselves without the need to construct a test-rig.

- a general review of the AirPods Pro where we offer our subjective review of this product

Follow us on Twitter at @kinicho to stay up-to-date with our latest news.

¹ We may do another post on what is meant by ‘Spatial Audio’ in Apple’s context another time. For this post, we just accept it as a generic description to mean any of 3D audio, positional audio, directional audio, immersive audio or volumetric audio when consumed binaurally over headphones/earphones or other binaural wearable solution that presents discrete left/right channel to the ears to exploit differences in time, intensity or phase between each ear, thus creating some form of perception of space.

² THE DETECTABILITY OF HEADTRACKER LATENCY IN VIRTUAL AUDIO DISPLAYS; Brungart, D, Simpson, B & Kordik, A. https://b.gatech.edu/3cL0sgu

³ We’re unsure of the control-rate for this data capture as it doesn’t appear as a setting anywhere that we have found, but we expect it to be around 50Hz.

⁴ The observations formed a normal distribution, so we can use basic descriptive measures to infer the parameters of the ecosystem and be confident that our findings are repeatable. To test for normality we used the Chi-Square Goodness of Fit test, with the Null Hypothesis that the data are normally distributed. If the P-value is less than 0.05 (the complement of the 95% confidence level) then the Null Hypothesis is rejected and the data are treated as not normal. The sample data were arranged in 9 bins of equal width between the minimum and maximum value. This observed distribution was measured against an expected outcome derived from the Normal Cumulative Distribution Function, giving a P-value of 0.26. As this is greater than 0.05, we do not reject the Null Hypothesis and conclude that the sample of observations is consistent with a Normal distribution.
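A minimal, standard-library sketch of this kind of test is shown below. Several details are assumptions on our part rather than a record of the original spreadsheet: the two outer bins are extended to infinity so the expected counts sum to the sample size, the degrees of freedom are taken as bins minus 1 minus the 2 estimated parameters, and the data are synthetic.

```python
import math
import random
import statistics

def normal_cdf(x, mu, sigma):
    """Cumulative distribution function of a normal distribution."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

def chi2_sf(x, df):
    """Upper-tail probability of the chi-square distribution.

    Uses the series for the regularised lower incomplete gamma function.
    """
    if x <= 0:
        return 1.0
    a, half_x = df / 2.0, x / 2.0
    term = 1.0 / a
    total = term
    n = 1
    while term > total * 1e-15:
        term *= half_x / (a + n)
        total += term
        n += 1
    lower = total * math.exp(-half_x + a * math.log(half_x) - math.lgamma(a))
    return max(0.0, 1.0 - lower)

def chi_square_normality(data, n_bins=9):
    """Return (statistic, p_value) for a chi-square goodness-of-fit test against a normal."""
    n = len(data)
    mu, sigma = statistics.fmean(data), statistics.stdev(data)
    lo, hi = min(data), max(data)
    width = (hi - lo) / n_bins
    if width == 0:
        raise ValueError("all observations are identical")
    # Observed counts in equal-width bins between the minimum and maximum.
    observed = [0] * n_bins
    for x in data:
        observed[min(int((x - lo) / width), n_bins - 1)] += 1
    # Expected counts from the normal CDF; outer bins extended to infinity (assumption).
    edges = [-math.inf] + [lo + i * width for i in range(1, n_bins)] + [math.inf]
    cdf = [normal_cdf(e, mu, sigma) for e in edges]
    expected = [n * (cdf[i + 1] - cdf[i]) for i in range(n_bins)]
    stat = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    df = n_bins - 1 - 2  # two parameters (mean, SD) estimated from the data
    return stat, chi2_sf(stat, df)

# Synthetic latencies for illustration only, not the measured observations.
random.seed(1)
synthetic = [random.gauss(204.0, 24.0) for _ in range(141)]
stat, p_value = chi_square_normality(synthetic)
print(f"chi-square = {stat:.2f}, p = {p_value:.3f}")
print("reject normality" if p_value < 0.05 else "do not reject normality")
```

A P-value above 0.05 means the test found no evidence against normality; it does not prove that the data are normal.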